REU PROJECT (2024)

Assistive Light Projection

Hybrid Workflows Track

Description

While head-mounted displays (HMDs) in AR applications show promise in creating immersive environments, their ability to facilitate collaborative interactions is inherently limited by the cost of supporting multiple users. Spatial augmented reality, such as light projection user interfaces (LPUIs), aims to augment the physical environment rather than the viewpoint of a single user. It is preferred by users over HMDs in applications such as augmented manufacturing and has been used to project system status information onto a 3D printer to provide hardware support.

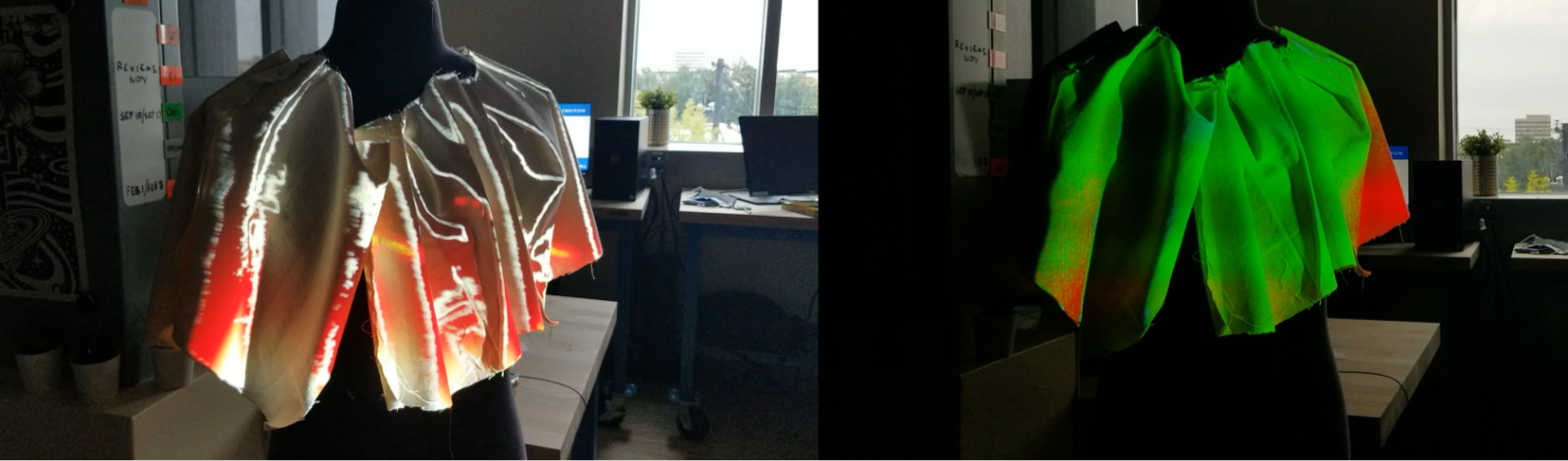

Current structured light projectors (e.g., Lightform) provide Photoshop-like design software for adding dynamic textures onto specific surfaces in a scene; however, the ability to prototype LPUIs remains limited. To develop a library of LPUI components and interactions, we situate this work within a physical drawing studio. Project goals include:

- Leveraging the Lightform projector to rapidly prototype different assistive cues onto still life objects, armatures, or human figure models. These projected cues assist the user in training the eye to understand proportions, human anatomy, foreshortening, perspective, and tonal placements;

- Assessing the efficacy and usability of these guides in a Wizard-of-Oz user study;

- Investigating a vocabulary of computer vision processes that lets users customize projected light cues (e.g., automating the placement of proportion markers and guides on a digital skeleton);

- Using a plug-and-play depth camera, synthesizing the above outcomes into a declarative domain-specific language for supporting LPUIs.
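
As one illustration of the proportion-marker goal above, the sketch below spaces markers one head length apart along the line from the top of the head to the feet, following the common figure-drawing convention of measuring a figure in head lengths. It is a minimal sketch under stated assumptions, not the project's method: the function name `proportion_markers`, its three keypoint inputs, and the 2D coordinates are hypothetical, and in practice the keypoints would come from whatever pose estimator or depth camera the project adopts.

```python
import math

def proportion_markers(head_top, chin, feet):
    """Place markers one head length apart from head_top toward feet.

    All three arguments are hypothetical (x, y) keypoints on a digital
    skeleton. Returns (markers, heads_tall), where markers is a list of
    (x, y) points and heads_tall is the figure height in head lengths.
    """
    head_len = math.dist(head_top, chin)   # length of one "head" unit
    total = math.dist(head_top, feet)      # full figure length
    if head_len == 0 or total == 0:
        raise ValueError("keypoints must be distinct")

    # Unit vector pointing from the top of the head toward the feet.
    ux = (feet[0] - head_top[0]) / total
    uy = (feet[1] - head_top[1]) / total

    # One marker at the top of the head, then one per whole head length.
    count = math.floor(total / head_len)
    markers = [
        (head_top[0] + ux * k * head_len, head_top[1] + uy * k * head_len)
        for k in range(count + 1)
    ]
    return markers, total / head_len

# Example: an upright figure 75 units tall with a 10-unit head.
markers, heads_tall = proportion_markers((0, 0), (0, 10), (0, 75))
print(heads_tall)      # 7.5
print(len(markers))    # 8
```

Each returned point could then be mapped into projector coordinates and drawn as a guide line on the model; that mapping depends on the projector calibration and is left out here.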